Hi there! 😊 I’m Kairan Dou. Welcome to my personal website! Feel free to call me Kevin.

I am a senior undergraduate student majoring in Computer Science at Nankai University, and I have just completed my exchange program at the University of California, Berkeley.

This summer, I am working as a research intern at both the MIT Media Lab and the Harvard Ophthalmology AI Lab under the joint supervision of Prof. Paul Liang and Prof. Mengyu Wang, where I focus on robotic manipulation. Since February 2025, I have been a Research Assistant at The University of Texas at Austin under the guidance of Prof. Philipp Krähenbühl, focusing on multimodal learning. Previously, I conducted extensive research at the Visual Computing and Intelligent Perception (VCIP) Lab, advised by Prof. Xiang Li.

My current research interests lie in:

- Reinforcement learning for stability and alignment in VLA models

- Developing algorithms to improve the performance of VLA models in post-training

- RL-augmented language model search and semantic retrieval

I aim to develop embodied agents with the capacity for generalizable reasoning and long-horizon decision-making. My long-term vision is to advance the foundations of real-world intelligence through unified perception, control, and learning.

You can also reach me on WeChat at: Darkeyes-

🔥 News

- 2025.05: 🎉🎉 Our paper was accepted at the FMEA Workshop @ CVPR 2025.

- 2025.02: 🎉🎉 I delivered an oral presentation at AAAI 2025 in Philadelphia.

- 2024.12: 🎉🎉 Our paper was accepted at AAAI 2025.

📝 Publications

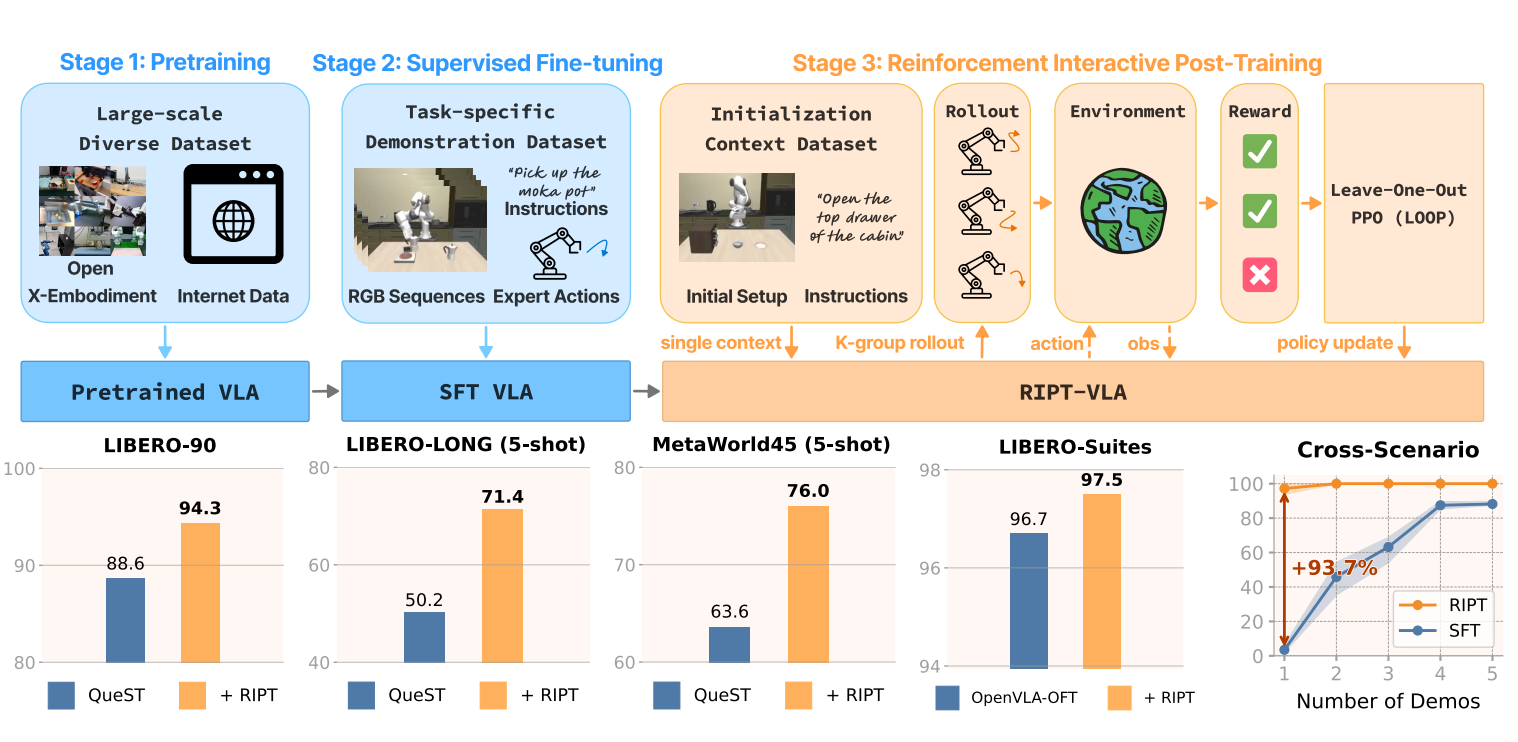

Interactive Post-Training for Vision-Language-Action Models

Shuhan Tan, Kairan Dou, Yue Zhao, Philipp Krähenbühl

- Introduces RIPT-VLA, a scalable third-stage reinforcement learning method for VLA models, enhancing performance through interactive training with sparse binary rewards.

- Achieves SOTA performance across diverse benchmarks, including LIBERO-90 (94.3%), LIBERO-LONG 5-shot (71.4%), and MetaWorld45 5-shot (76.0%), and improves the OpenVLA-OFT model to a 97.5% success rate.

- Employs dynamic rollout sampling and leave-one-out advantage estimation to significantly enhance generalization, stability, and effectiveness across challenging tasks and scenarios.
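For readers unfamiliar with the term, here is a minimal sketch of leave-one-out advantage estimation with sparse binary rewards. It is an illustration under assumptions, not the RIPT-VLA implementation: the function names are hypothetical, and the filtering step is only one plausible reading of "dynamic rollout sampling" (dropping rollout groups that carry no learning signal).

```python
def leave_one_out_advantages(rewards):
    """Advantage of each rollout = its reward minus the mean reward of the OTHER rollouts.

    `rewards` holds the binary (0/1) outcomes of K rollouts from the same task context.
    """
    k = len(rewards)
    if k < 2:
        raise ValueError("need at least two rollouts per context")
    total = sum(rewards)
    # Baseline for rollout i is the average over the remaining k - 1 rollouts.
    return [r - (total - r) / (k - 1) for r in rewards]


def keep_informative_groups(groups):
    """Assumed filtering step: drop groups whose rewards are all identical.

    When every rollout succeeds or every rollout fails, all leave-one-out
    advantages are zero, so the group contributes nothing to the update.
    """
    return [g for g in groups if len(set(g)) > 1]
```

For example, `leave_one_out_advantages([1, 0, 0, 1])` gives +2/3 to each successful rollout and -2/3 to each failed one, so the advantages within a group sum to zero.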

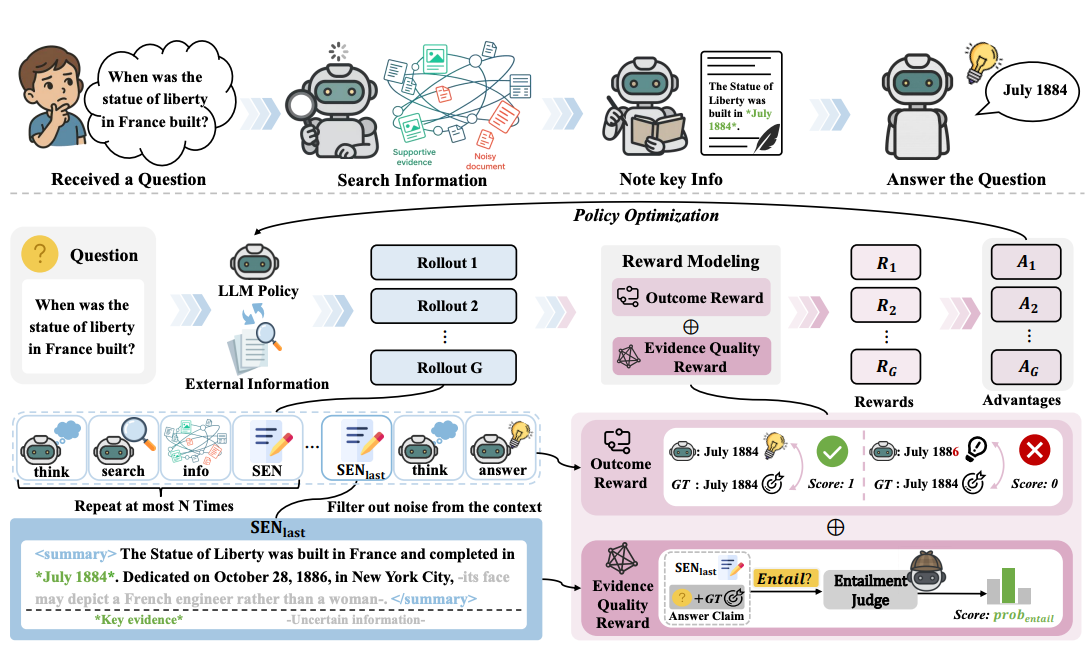

EviNote-RAG: Enhancing RAG Models via Answer-Supportive Evidence Notes

Yuqin Dai*, Guoqing Wang*, Yuan Wang*, Kairan Dou, Kaichen Zhou, Zhanwei Zhang, Shuo Yang, Fei Tang, Jun Yin, Pengyu Zeng, Zhenzhe Ying, Can Yi, Changhua Meng, Yuchen Zhou, Yongliang Shen, Shuai Lu

- Introduces EviNote-RAG, an agentic RAG framework that restructures the pipeline into a retrieve–note–answer process. It trains LLMs to generate Supportive-Evidence Notes (SENs) and leverages an entailment-based Evidence Quality Reward (EQR) to improve evidence selection and reasoning.

- Achieves state-of-the-art performance across in-domain and out-of-domain QA benchmarks, with notable relative F1 gains: +20% on HotpotQA (+0.093), +40% on Bamboogle (+0.151), and +91% on 2Wiki (+0.256).

- Employs structured note-taking and reward-guided filtering to mitigate noisy retrievals, enhance training stability, and improve generalization and efficiency, significantly outperforming CoT, prompt-based, and RL-based baselines.

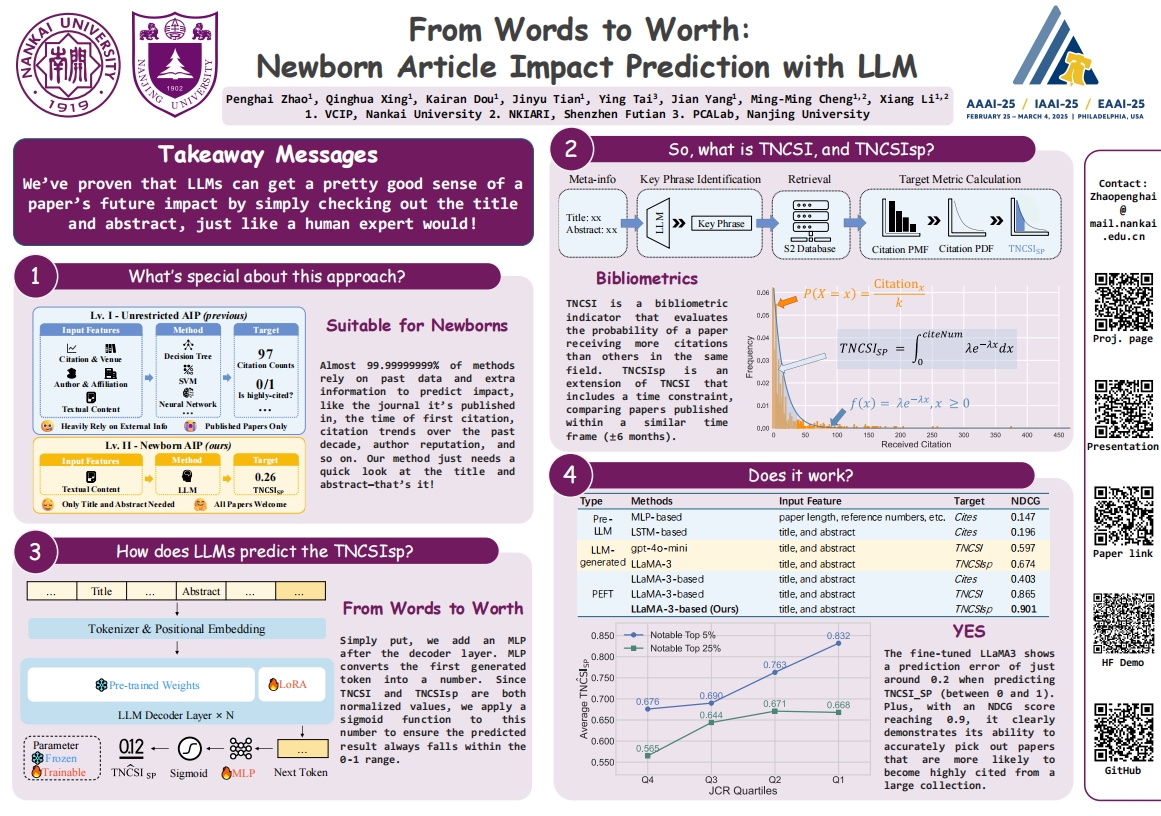

From Words to Worth: Newborn Article Impact Prediction with LLM

Penghai Zhao, Xinghua Xing, Kairan Dou, Jinyu Tian, Ying Tai, Jian Yang, Ming-Ming Cheng, Xiang Li

- Proposed the “Newborn Article Impact Prediction” (Newborn AIP) task and introduced the TNCSIsp metric, achieving an NDCG@20 score of 0.901.

- Constructed the TKPD and NAID datasets, comprising over 12,000 samples for training and validation.

- Used LoRA to fine-tune and test 5+ large language models on a server to evaluate prediction performance.
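As a quick reference for the NDCG@20 figure above, here is a minimal sketch of how NDCG@k is commonly computed. It assumes the linear-gain variant (relevance divided by a log2 position discount); the function names are hypothetical and the paper's exact evaluation code may differ.

```python
import math


def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k items (linear gain)."""
    return sum(rel / math.log2(pos + 2) for pos, rel in enumerate(relevances[:k]))


def ndcg_at_k(predicted_scores, true_relevances, k):
    """NDCG@k: DCG of the predicted ranking divided by DCG of the ideal ranking."""
    if len(predicted_scores) != len(true_relevances):
        raise ValueError("scores and relevances must have the same length")
    # Order the true relevances by descending predicted score.
    order = sorted(range(len(predicted_scores)),
                   key=lambda i: predicted_scores[i], reverse=True)
    ranked = [true_relevances[i] for i in order]
    ideal = sorted(true_relevances, reverse=True)
    ideal_dcg = dcg_at_k(ideal, k)
    return dcg_at_k(ranked, k) / ideal_dcg if ideal_dcg > 0 else 0.0
```

A ranking that orders articles exactly by their true impact scores 1.0, and any misordering within the top k lowers the score.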

📖 Education

01/2025-05/2025

Exchange Student, University of California, Berkeley

09/2022-06/2026

B.Eng. in Computer Science, Nankai University

💻 Internships

2025.06 - present

Research Intern (joint appointment), MIT Media Lab and Harvard Ophthalmology AI Lab

2025.02 - present

Research Assistant, The University of Texas at Austin

2024.06 - 2025.02

Research Assistant

💬 Projects

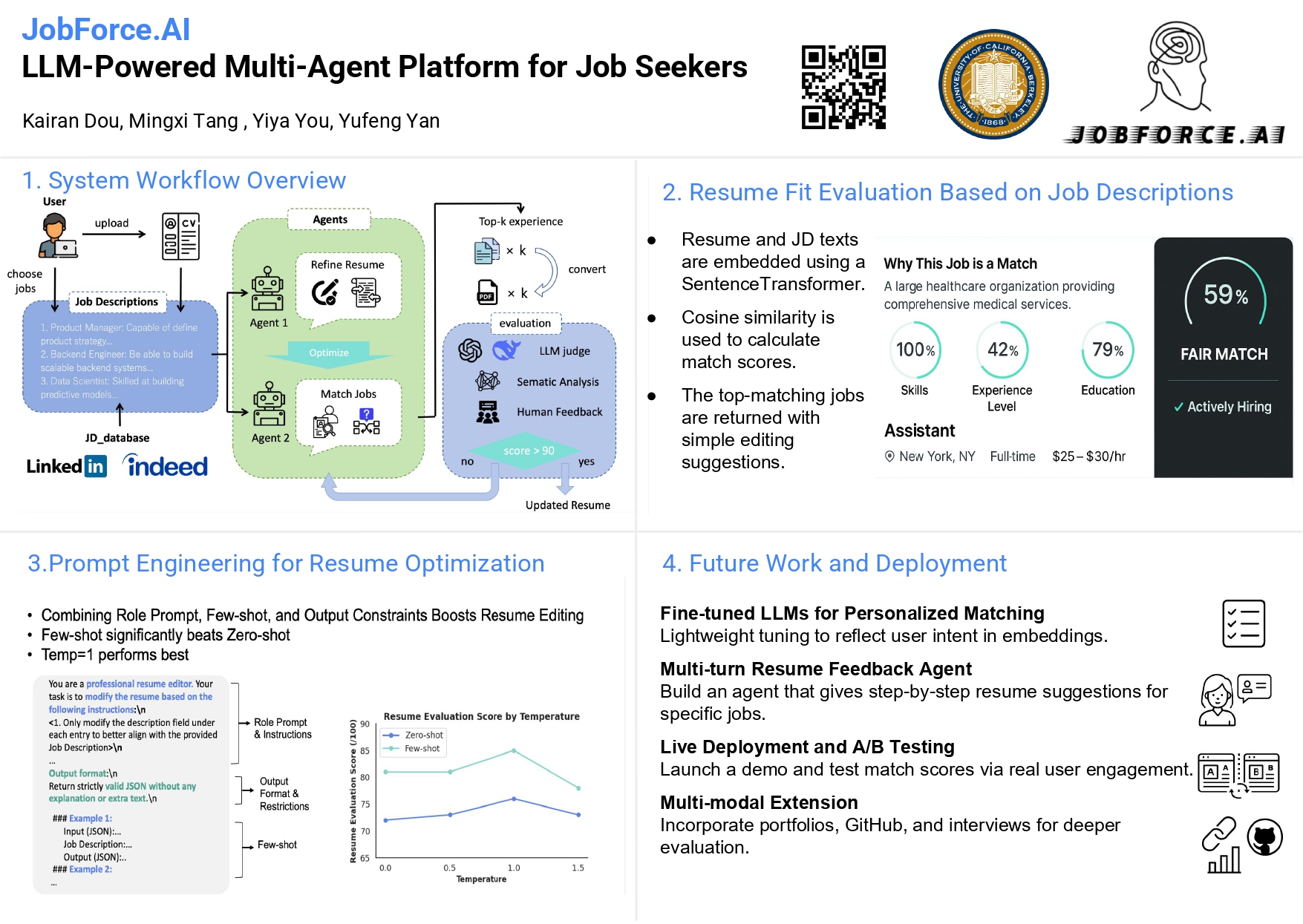

JobForce.AI

LLM-Powered Multi-Agent Platform for Job Seekers

Kairan Dou, Mingxi Tang, Yiya You, Yufeng Yan

- End-to-end LLM-powered pipeline automates both resume rewriting and job matching in a single workflow.

- Context-aware resume optimization tailors each experience using representative job descriptions and LLM-generated language.

- Semantic embedding–driven selection ranks and condenses content based on normalized cosine similarity for maximum relevance.

- Real-time, personalized job recommendations match optimized resumes against live postings filtered by user preferences.

- Quantitative alignment evaluation delivers a clear 100-point score and visual feedback on resume–JD fit improvements.
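The embedding-driven selection step described above can be sketched roughly as follows. This is an illustration under assumptions rather than the JobForce.AI code: it takes "normalized cosine similarity" to mean cosine similarity rescaled from [-1, 1] to [0, 1], uses plain Python lists as stand-ins for real embeddings, and all function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def normalized_similarity(a, b):
    """Assumed normalization: map cosine similarity from [-1, 1] onto [0, 1]."""
    return (cosine_similarity(a, b) + 1) / 2


def rank_items(job_vec, items, top_k):
    """Score (text, embedding) pairs against a job embedding and keep the top_k."""
    scored = [(normalized_similarity(job_vec, vec), text) for text, vec in items]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

Multiplying the normalized score by 100 would give one simple way to arrive at a 100-point fit score, though the project's actual scoring may be computed differently.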

🏃‍♂️ Hobbies

- 🏸 Badminton: Men’s Singles and Doubles Champion of the College.

- 🎸 Guitar: Served as the vice president of the Guitar Club.

- 🎤 Singing: Recognized as one of the top ten singers in the college.